
1.0 Survey design

1503 interviews were conducted using Computer Assisted Telephone Interviewing between 11 July and 7 August 2007. Respondents were chosen at random from household telephone numbers listed in the electronic White Pages. Quotas were placed on the sample by location, age and sex to ensure that sufficient interviews were conducted with representatives of these groups to allow robust analysis. The data was then weighted to reflect the Australian adult resident population as measured in the 2006 Australian Bureau of Statistics population Census.

In addition to the main study, a Verification Study was conducted in which three questions from the main study were asked on the NewsPoll Omnibus. The NewsPoll Omnibus was selected because the sample structure closely reflected the sample structure of the main survey. Interviewing occurred over the period 3 to 7 August 2007.

1.1 Questionnaire design

The questionnaire was adapted from previous community attitudes surveys conducted in 2001 and 2004[1]. New modules on identity fraud and theft and closed-circuit television were added to the 2007 survey. These were developed jointly by Wallis Consulting Group (Wallis) and the Office of the Privacy Commissioner (the Office). The survey contained the following question modules:

- General Attitudes to Providing Personal Information

- Trust in Organisations Handling Personal Information

- Level of Knowledge

- Privacy and Government

- Privacy and Business

- Privacy and Health Information

- Privacy and The Workplace

- Identity Fraud and Theft

- Closed Circuit Television

- Demographics

In addition to these modules there was an introductory section which contained a brief statement of the purpose of the survey and contact details for the Office, Wallis and the Australian Market and Social Research Society’s (AMSRS) survey line. This section also contained questions screening respondents for age and gender.

The following changes were made to the 2004 questionnaire for the purposes of the 2007 study:

Trust in Organisations Handling Personal Information – Respondents were no longer asked how trustworthy they thought mail order companies were; instead they were asked about insurance companies.

Level of Knowledge – The question concerning which activities contravene the Act was completely different from 2004. Respondents were not asked to rate how much they thought they knew about protecting their personal information or to provide their views on organisations’ general privacy policies.

Privacy and Business – The questions concerning the use of the Electoral Roll and White Pages by business were moved into this section.

Privacy and Health Information – Respondents were no longer asked whether they supported the idea of a unique identifier for health services. Instead they were asked about their support for a National Health database and, in addition, to suggest the circumstances under which it would be appropriate for a doctor to inform a relative of a person with a genetic illness that the person has the illness.

Privacy and the Workplace – The response options for the statement about randomly drug testing employees were changed and multiple responses were allowed. In 2004 one possible response was “only if necessary to ensure safety and security”; in 2007 this was changed to “only if they suspect wrongdoing”.

Demographics – The narrow income bands that respondents had been asked to respond to in the past (leading to a 42% refusal rate) were reduced to four broad bands, with a resultant fall in the refusal rate to 19%. As a result, the income bands were reported slightly differently in 2007 from 2004, with households earning less than $25 000 roughly coinciding with those relying heavily on government benefits.

The question ascertaining respondents’ education levels had a slightly different response frame, offering less detail about the level of education completed up to Year 12 and including a post-graduate level of qualification.

Although the question about respondents’ occupations was asked in exactly the same fashion as in previous surveys, a different approach to coding the responses was taken. Whereas interviewers coded the responses in previous surveys, in 2007 interviewers recorded occupation verbatim and responses were coded by a specialist coding team, thereby taking the onus off the interviewer.

The questionnaire was set up on Wallis’ system and timed by interviewers. Their initial uninterrupted estimate of the length of the questionnaire was approximately 20 minutes, although there were concerns that this might be an underestimate. The individual question modules were timed and their order was changed to enhance flow and comprehension (as well as speed). Nonetheless, the pilot study revealed that 20 minutes was an underestimate, with an average time of just under 31 minutes being achieved. While it is common for pilot study interviews to run longer than when a survey goes live because interviewers are still familiarising themselves with the study and dealing with questions that respondents may have, as well as noting any problems or wording difficulties so that these may be improved, it was clear that this questionnaire could not run for 20 minutes as planned. The pilot showed that one of the key reasons that the interview was running longer than estimated was the interest respondents displayed in the subject matter. This meant those agreeing to interview were happy to deliberate on their responses to an extent unforeseen by our interviewing team in practice runs.

At the time of the pilot the question modules were timed as follows:

- Introduction – including screening and respondent selection – 1.5 minutes

- General Attitudes to Providing Personal Information – 2 minutes

- Trust in Organisations Handling Personal Information – 2 minutes

- Level of Knowledge – 2 minutes

- Privacy and Government – 1.5 minutes

- Privacy and Business – 1.5 minutes

- Privacy and Health Information – 4 minutes

- Privacy and the Workplace – 2.5 minutes

- Identity Fraud and Theft – 2 minutes

- Closed Circuit Television – 1.5 minutes

Wallis suggested several strategies for shortening the questionnaire, including modularising it so that all respondents would be asked 20 minutes of questions. The Office decided to run the longer questionnaire and to change the introductory script accordingly to give respondents an accurate estimate of the time the interview would take to complete, in keeping with the AMSRS Code of Professional Behaviour.

1.2 Sample design and preparation

The sample was structured to reflect the population as well as ensure that there were enough respondents in each broad analysis group to facilitate statistical analysis. The sample was stratified by state and location with quota targets applied on age and location. A sex quota was not imposed; however, the plan was to manage the ratio during fieldwork to ensure that the final outcome was no greater than 60:40 female:male (the final ratio was 55:45 female:male).

Table 1. Interviews achieved by quota cell

| Sex | Age | Total | SYD | NSW/ ACT | MEL | VIC | BRIS | QLD | ADEL | SA/ NT | PERTH | WA | TAS |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Male | 18-24 | 74 | 12 | 13 | 27 | 3 | 8 | 4 | 3 | 0 | 3 | 0 | 1 |

| Male | 25-34 | 157 | 40 | 17 | 33 | 7 | 22 | 11 | 7 | 3 | 11 | 3 | 3 |

| Male | 35-49 | 194 | 55 | 29 | 34 | 12 | 11 | 19 | 6 | 7 | 11 | 5 | 5 |

| Male | 50+ | 245 | 51 | 36 | 24 | 22 | 20 | 25 | 20 | 10 | 24 | 7 | 6 |

| Female | 18-24 | 91 | 15 | 10 | 29 | 1 | 8 | 11 | 4 | 0 | 10 | 3 | 0 |

| Female | 25-34 | 222 | 45 | 29 | 50 | 11 | 24 | 25 | 8 | 5 | 14 | 5 | 6 |

| Female | 35-49 | 232 | 50 | 30 | 39 | 14 | 18 | 27 | 12 | 10 | 9 | 12 | 11 |

| Female | 50+ | 288 | 47 | 46 | 34 | 20 | 24 | 28 | 30 | 13 | 23 | 10 | 13 |

| Total | | 1503 | 315 | 210 | 270 | 90 | 135 | 150 | 90 | 48 | 105 | 45 | 45 |

The sample was drawn from the electronic White Pages allowing 60 numbers for each completed interview (90 000 in all). These numbers were checked for duplicates and missing digits which reduced the usable starting sample by about 5%. This list was washed against Wallis’ internal Do-Not-Call List and a couple of numbers were removed. Following these processes, 84 157 numbers were available for use in the study, although not all numbers were used in the course of interviewing (see Field Statistics for more details).
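The cleaning steps described above (checking for missing digits, removing duplicates and washing against a Do-Not-Call list) can be sketched as follows. This is a minimal illustration, not the procedure Wallis actually ran: the function name `prepare_sample`, the assumption that a valid number has ten digits, and the example numbers are all hypothetical.

```python
def prepare_sample(raw_numbers, do_not_call, expected_digits=10):
    """Return usable numbers: well-formed, de-duplicated, not on the Do-Not-Call list.

    expected_digits=10 is an assumption made for this sketch; the report does not
    say how missing digits were detected.
    """
    seen = set()
    usable = []
    for raw in raw_numbers:
        # Keep digits only, so "03 9555 0101" and "0395550101" compare equal.
        digits = "".join(ch for ch in raw if ch.isdigit())
        if len(digits) != expected_digits:
            continue  # missing (or extra) digits
        if digits in seen:
            continue  # duplicate of a number already processed
        seen.add(digits)
        if digits in do_not_call:
            continue  # washed against the Do-Not-Call list
        usable.append(digits)
    return usable


# Made-up numbers for illustration only.
sample = prepare_sample(
    ["03 9555 0101", "0395550101", "03 9555 010", "02 9555 0199"],
    do_not_call={"0295550199"},
)
print(sample)  # ['0395550101']
```

Normalising each number to its digits before comparing is what lets the duplicate check catch the same number written with different spacing.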

2.0 Survey conduct

2.1 Questionnaire set-up and testing

Once the questionnaire had been approved by the Office to proceed to pilot testing, it was set up on Wallis’ CATI system and subjected to the following checking process:

- Wording, skips and routing were checked in hard-copy format.

- The questionnaire was checked by the analyst who set it up.

- The consultant checked the main skips by comparing top-line results from test interviews against the paper questionnaire.

- ‘Dummy’ interviews were conducted using automatic computer generated responses, and the top-line data from these were investigated to ensure all questions had the correct number of responses.

- A final test was run by a member of the fieldwork team prior to briefing and ‘going live’.

2.2 Pilot study

The pilot study was conducted as an exact copy of the main survey. This consisted of 22 interviews, two (one male and one female) in each of the eleven locations used to identify Australian regions and to be used to control quotas. This structure was used to test that the quotas were working correctly.

Five interviewers received a one-hour briefing on 28 June prior to conducting a test run and then live interviews. The interviews were completed on the evening of 29 June and interviewers were then debriefed.

The average duration of the questionnaire during the pilot was 30.6 minutes and a strike rate of 0.83 was achieved. This was clearly too long and the design procedures described earlier were introduced in order to shorten the length of the questionnaire.

There being no other changes to the questionnaire, the pilot study interviews were included with interviews from the main survey.

2.3 Main study

A further 1481 interviews (making a total of 1503) were completed between 11 July and 7 August 2007. In addition to the interviewers who worked on the pilot study, 69 interviewers were briefed for the task and the interview was administered in exactly the same way as the pilot study.

2.3.1 Interviewers and field staff briefing

Interviewers, supervisors and senior field staff attended briefing sessions conducted by the Project Director or Project Manager. A total of 74 interviewers were briefed, as well as 5 supervisors. The Field Manager did not attend formal briefings but was briefed informally and attended progress meetings throughout the fieldwork.

Although a ‘hard’ copy of the questionnaire was available to interviewers, our briefing facilities allowed a projected image of a ‘dummy’ test interview to be used. The briefing session was interactive and interviewers took an active part in asking and answering questions as they were displayed on screen. This is much closer to the interviewers’ real experience of the questionnaire than is the hard copy version.

Interviewers were able to ask questions and provide comment as they saw fit. Following the briefing interviewers conducted test interviews using an exact copy of the live questionnaire version on the CATI system to familiarise themselves with the practical use of the questionnaire before they conducted any live interviews.

In addition to briefing interviewers about the background of the study and its conduct, interviewers were briefed so that they could answer the following questions appropriately:

Q. How did you get my telephone number?

A. Your number was selected at random from the electronic White Pages.

Q. How do I know that my answers will remain confidential?

A. We will separate your telephone number, and any other identifiable information, from your answers to the survey as soon as the survey is complete. We will keep a record that we contacted this number for a period of six weeks and then delete the record.

Q. What will you do with my information?

A. Your answers, along with those of other respondents, will form the basis of a report submitted to the Office of the Privacy Commissioner. All analysis will be done on aggregated results – that is groups of people rather than individuals.

Interviewers were also given contact phone numbers for the Office in addition to the standard industry information line and reference to Wallis’ website. More information about calls to these numbers is given in section 2.3.5.

2.3.2 Auditing and quality control

A total of 271 (18%) interviews were monitored by senior field staff on the CATI system; they could hear the call as well as see how interviewers were recording responses. Of those:

- 150 (10%) were monitored for a period less than 75% of the entire interview length; and

- 121 (8%) were validated by listening to more than 75% of the entire interview.

No issues with the survey were encountered through this process.

2.3.3 Security access control and privacy measures

Although no information that could personally identify individual respondents was captured in the course of this survey, Wallis often conducts such studies and has the requisite security in place.

As a primary security measure, Wallis has separated its day-to-day office network, which is accessible via e-mail and Internet, from its field operations. Identified information is only stored on the field system. The only identifying record captured in this survey was a household telephone number.

2.3.4 Call protocols

The Office had particular guidelines which were followed in the conduct of the survey. They were:

- No calls to be made on a Sunday unless by respondent request.

- Calls to be made within the hours of 9.00 am to 8.30 pm local time Monday to Friday and 9.00 am to 5.00 pm on Saturdays.

- If no contact had been made with a household after trying the number five times, no further attempt was made to contact anyone on that number.

- Appointments were only made if they were firm appointments. Interviewers did not make appointments without confirming a time with the respondent.

It should also be noted that Wallis does not leave messages on answering machines unless specifically requested to do so. In the conduct of this survey no messages were left on answering machines.
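The call guidelines above amount to a small set of rules that a dialling system could check before each attempt. The sketch below is one possible reading of them, not a description of Wallis’ CATI system: the function `call_permitted` and its parameters are hypothetical, and it assumes that a respondent-requested Sunday call is allowed at any hour, which the guidelines do not specify.

```python
from datetime import datetime, time

MAX_ATTEMPTS_WITHOUT_CONTACT = 5


def call_permitted(local_dt, attempts_without_contact, respondent_requested=False):
    """Return True if a call at local_dt (respondent's local time) fits the guidelines."""
    # No further attempts once five tries have failed to reach the household.
    if attempts_without_contact >= MAX_ATTEMPTS_WITHOUT_CONTACT:
        return False
    day = local_dt.weekday()  # Monday is 0, Sunday is 6
    if day == 6:
        # Sundays only at the respondent's request (hours assumed unrestricted).
        return respondent_requested
    # Saturdays close at 5.00 pm, weekdays at 8.30 pm; all days open at 9.00 am.
    closing = time(17, 0) if day == 5 else time(20, 30)
    return time(9, 0) <= local_dt.time() <= closing


print(call_permitted(datetime(2007, 7, 11, 19, 0), attempts_without_contact=0))  # Wednesday evening
print(call_permitted(datetime(2007, 7, 14, 19, 0), attempts_without_contact=0))  # Saturday evening
```

Counting only attempts that failed to make contact is what allows a sixth call where the fifth resulted in a firm appointment.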

2.3.5 Calls to information numbers

For this study, respondents were given the option of checking on the bona fides of Wallis through the AMSRS industry information line or the company website. In addition, the survey’s bona fides could be checked via the Office’s 1300 number or its website.

2.3.5.1 AMSRS SurveyLine (1300 364 830)

As Wallis is a member of the Association of Market and Social Research Organisations (AMSRO) and its consultants are members of the professional body, the AMSRS, respondents were directed to the industry hotline if they wished to check on the bona fides of Wallis or the conduct of the survey. The hotline is manned 24 hours a day. All calls, their nature and, if necessary, the resolution of them are logged. For this survey the AMSRS officer noted that there were no calls to the survey line relating to Wallis or the topic of privacy during the interviewing period.

2.3.5.2 The Office (1300 363 992)

The Office received three calls to its 1300 number, all related to the bona fides of the survey.

2.3.5.3 Wallis’ Website (www.wallisgroup.com.au)

Respondents were provided with Wallis’ website address. Surveys that are in field are logged on a page of this website (with ongoing and large-scale studies being given their own pages as required). This study did not receive its own page, but the following listing appeared for the duration of the survey. We are unable to say how many people looked at the specific details of the survey on the website.

Community Attitudes towards Privacy: The Office of the Privacy Commissioner

Similar studies have been carried out at regular intervals since 1990 in order to measure changes in public attitudes towards privacy-related concerns. This study investigates people’s views about the way their privacy is handled in a range of areas including: health; work; business; and government. It asks respondents about such topics as privacy laws, ID theft, CCTV as well as the extent to which they trust organisations of different types. The Privacy Commissioner will use the results to suggest appropriate changes to privacy legislation for a review being carried out by the Australian Law Reform Commission.

1500 interviews will be completed with a representative sample of the adult Australian population. Interviews will be conducted from the 11th to the 21st July.

To check on these or any other surveys, please call AMSRS SurveyLine on 1300 364 832 or Wallis Consulting Group (03) 9621 1066.

2.4 Verification study

A small study was conducted concurrently to ensure that responses to questions in the main survey were accurate and representative of the broader community. Concerns had been raised in the past that contextual bias could enter the questionnaire as respondents were primed by previous questions to provide answers that may not have reflected their view when asked questions in isolation.

Three questions were chosen from the main survey to be added to NewsPoll’s Omnibus, a multi-client survey, between 3 and 7 August 2007. The sampling structure of the Omnibus was similar to that used for the main survey and 1200 Australians over 18 years of age were interviewed by telephone. Full details of this study are reported in Appendix 1.

3.0 Field statistics

3.1 Response rate

Tables 2 and 3 below outline the field statistics for the 2007 survey. The response rate for the survey was low with only one in twenty eligible respondents completing an interview. The principal reasons for this were:

The length of the survey – respondents were told that the survey would take 20 to 30 minutes to complete and many were unable to spare that amount of time. This was particularly true of the younger age groups. The effective response rate was calculated from interviews achieved versus the telephone numbers in scope, excluding those who were ineligible (1503/28 262).

The high number of younger respondents required to meet quota targets meant that some older willing respondents were not interviewed. Prior to quotas filling the response rate was much higher, as all willing respondents were able to participate.

The effective response rate thus differs markedly from the co-operation rate. This measure compares the number of in-scope respondents who were willing to participate (7188) to the total number interviewed (1503) and was 22%, similar to that of other population surveys conducted by Wallis.
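The effective response rate quoted above can be reproduced from the in-scope figures in Table 2. The short calculation below is a sketch for illustration; the function name `effective_response_rate` is ours rather than a term from the survey.

```python
def effective_response_rate(interviews, total_in_scope, ineligible):
    """Interviews achieved divided by in-scope numbers, excluding ineligible contacts."""
    eligible = total_in_scope - ineligible
    return interviews / eligible


# Figures from Table 2: 33,393 in scope, of which 5,131 were ineligible.
eligible = 33_393 - 5_131
rate = effective_response_rate(1_503, 33_393, 5_131)
print(f"{eligible} eligible numbers, effective response rate {rate:.1%}")
# 28262 eligible numbers, effective response rate 5.3%
```

A rate of 5.3% is roughly one completed interview for every nineteen eligible numbers, in line with the “one in twenty” figure given in the text.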

While the low response rate is a concern, the characteristics of the final sample match the known characteristics of the Australian population well, therefore limiting the potential for sample bias and eliminating the need for substantial weighting to be applied to make the results representative. The differences are elaborated on in section 4.0.

The results of the verification study also match the main study closely suggesting that there was no notable sample bias.

Although statistics are not collected as to the reasons why respondents do not wish to participate, anecdotal evidence from field management staff suggests that the length of the survey was the primary reason for respondent refusal. Reportedly many respondents said that they would have participated if the survey had been shorter, and interviewers cited high levels of interest in the subject matter. The market research industry guidelines on telephone interviewing length suggest that a maximum average interview length of 20 minutes is appropriate where a prize or incentive is not offered (up to 40 minutes with a prize or incentive). These guidelines are based on known industry response rates and evidence from surveys of non-response (see www.yourviewscount.com.au).

Table 2. Field Statistics[2] — Total attempted contacts

| Outcome | Numbers |
|---|---|
| Total sample prepared | 84,157 |
| Used phone numbers | 57,516 |
| Unused sample (no call attempts) | 26,641 |
| In scope | |
| Interviews | 1,503 |
| Respondent not available in survey period | 554 |
| Stopped interview - not completed | 21 |
| Refusals (total) | 26,184 |
| Ineligible/ Does not qualify | 5,131 |
| Total in scope | 33,393 |
| Out of scope | |
| Business/ workplace number | 765 |
| FAX | 1,080 |
| Number disconnected | 9,098 |
| Total out of scope | 10,943 |
| Total Resolved Contact Attempts | 44,336 |
| Change phone number (Some respondents made an appointment to be called on a different number to the one in the sample. The outcomes of these calls are included in the ‘In-scope’ statistics) | 11 |
| Called 5 times - no answer on last attempt | 6,439 |
| No answer | 4,153 |
| Answering machine | 2,238 |
| Busy/Engaged | 350 |
| Total Unresolved Contact Attempts | 13,180 |

In total, the automatic dialler system made 118 581 call attempts to the 57 516 numbers used. The dialler stopped 66 933 calls without need to pass them to an interviewer because the system detected that the number was out of service, not being answered or was busy/engaged. Out of service numbers were discarded from the sample, but other numbers were called again up to a maximum of five times before being discarded. Calls that were detected as being connectable were passed to an interviewer. There were 51 648 such calls. Many of these calls resulted in connections to facsimile or answering machines and were terminated manually by interviewers. Table 2 shows the combined results of the call outcome statistics produced by the automatic dialling system and the interviewing system for the 57 516 numbers used. Table 3 details the outcomes of each call attempt.
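As a cross-check on how the dialler and interviewer figures combine, the totals quoted in this section and in Tables 2 and 3 can be reconciled with a few lines of arithmetic. This is only a consistency sketch; the helper `reconciles` is a hypothetical name.

```python
def reconciles(parts, total):
    """Return True if the component counts add up to the stated total."""
    return sum(parts) == total


numbers_used = 57_516
total_call_attempts = 118_581

# Each used number ends up either resolved or unresolved (Table 2).
print(reconciles([44_336, 13_180], numbers_used))

# Each call attempt was handled either by an interviewer or by the dialler (Table 3).
print(reconciles([51_648, 66_933], total_call_attempts))

# Average number of call attempts per used number.
print(f"{total_call_attempts / numbers_used:.2f}")
```

Both checks hold, and the average works out at just over two call attempts for each number used.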

Table 3. Field Statistics — Total calls made

| Call outcome | Calls |
|---|---|
| Total calls made | 118,581 |
| Interview | 1,503 |
| Answering machine | 12,308 |
| Respondent not available during survey period | 554 |
| Business/ workplace number | 765 |
| Refused - household | 24,484 |
| Refused - selected respondent | 1,700 |
| Ineligible/Does not qualify | 5,131 |
| FAX | 1,080 |
| Appointment | 4,018 |
| Change phone number | 11 |
| Stopped interview | 94 |
| Total interviewer handled calls | 51,648 |
| Dialer - Busy | 3,238 |
| Dialer - No answer | 54,575 |
| Dialer - Site out of service | 9,120 |
| Total dialer handled calls | 66,933 |

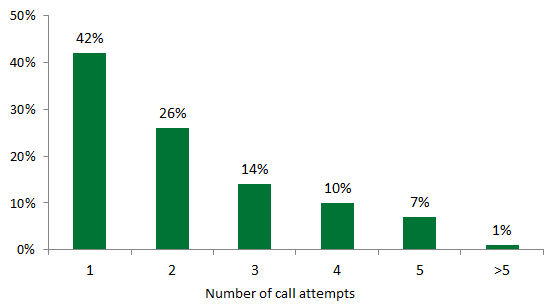

Chart 1. Interviews achieved by number of calls to number

Chart 1 bears testimony to the wisdom of the Office’s policy of restricting to five the number of calls made to each number in order to make contact: 68% of interviews were achieved on the first or second call attempt. A small number of contacts were made on the sixth attempt. These were made where the fifth attempt resulted in a contact and a hard appointment being made for an interview at a later time.

4.0 Post survey data management

4.1 Preparation of the data

4.1.1 Data Analysis

Following the completion of interviewing, data analysts prepared a set of topline findings from which table specifications were formulated. A data specification request was prepared for the Data Analyst. They also reviewed the data file to ensure that it was complete and that answers were logical. Verbatim responses were removed from the data file and sent to be coded and re-input, in numeric form, back into the data file later.

In this case it had been agreed with the Office that all key demographic variables, namely age, location (state and metropolitan/non-metropolitan), sex, occupation, socio-economic standing, household income and education level, would be analysed. The topline findings guide the way in which analysis is conducted. For example, sex has only two possible answers, male and female, and both were represented in sufficient numbers to be able to compare these two subgroups. However, there were 11 occupation categories – too many to compare each to the other with any level of statistical veracity. For data analysis purposes these 11 categories were collapsed into 6 main categories. A set of detailed cross tabulations and a data file have been prepared.

4.1.2 Coding

Verbatim responses are assigned codes based on codeframes developed by the Project Director, Project Manager and the Coding Manager. In this case, they were based on those used in 2004 with additions as necessary. New questions were assessed to determine the key number of responses by major themes and these were approved by the Office for use by the coding team.

4.2 Weighting of the survey data

4.2.1 Profile of Respondents

Table 4 shows unweighted survey response data compared with the characteristics of the adult Australian population from the Australian Bureau of Statistics 2006 Census, to which they were weighted for age, sex and location. Note that 2001 Census data is shown for comparative purposes for occupation and education as 2006 data is not yet available.

Table 4. Respondent characteristics unweighted and weighted

| Sex | Unweighted Sample n=1503 % | Weighted to ABS Population Census n=15 090 000 % |

|---|---|---|

| Male | 45 | 49 |

| Female | 55 | 51 |

| Household income | Unweighted Sample n=1503 % | Weighted to ABS Population Census n=15 090 000 % |

|---|---|---|

| Less than $25,000 | 16 | 19 |

| $25,000 - $75,000 | 35 | 40 |

| $75,000 - $100,000 | 13 | 13 |

| Over $100,000 | 18 | 16 |

| Refused | 19 | 11 |

| Occupation | Unweighted Sample n=1503 % | Weighted to ABS Population Census n=15 090 000 % |

|---|---|---|

| Upper White Collar | 37 | 39 |

| Lower White Collar | 40 | 38 |

| Upper Blue Collar | 8 | 12 |

| Lower Blue Collar | 8 | 8 |

| Don’t know/other/refused | 7 | 2 |

| Education | Unweighted Sample n=1503 % | Weighted to ABS Population Census n=15 090 000 % |

|---|---|---|

| Up to Year 12 | 38 | 53 |

| Diploma/Trade | 25 | 16 |

| Degree or higher | 36 | 19 |

| Don’t know/other | 1 | 11 |

[*]NOTE: ABS data is not available from a single source; these figures are derived from two sources.
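To illustrate how weighting brings the sample into line with the Census, the sketch below derives simple post-stratification weights from the sex figures in Table 4. It is a simplified example only: the survey weighted on age, sex and location together, and the cell-level targets are not given in this section, so the single-variable approach and the function name `post_stratification_weights` are assumptions made for illustration.

```python
def post_stratification_weights(sample_share, population_share):
    """Weight for each group = population share / sample share."""
    return {
        group: population_share[group] / sample_share[group]
        for group in sample_share
    }


# Sex distribution from Table 4 (unweighted sample vs ABS Census).
sample_share = {"Male": 0.45, "Female": 0.55}
population_share = {"Male": 0.49, "Female": 0.51}

weights = post_stratification_weights(sample_share, population_share)
for group, weight in weights.items():
    print(f"{group}: weight {weight:.2f}")
# Male: weight 1.09
# Female: weight 0.93
```

Under this sketch each male respondent counts for slightly more than one interview and each female respondent for slightly less, so that the weighted sample matches the 49:51 split in the population.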

Although there were no quotas applied to household income, the distribution is similar to that shown in ABS 2006 Census data. The distribution in the sample is slightly skewed towards higher incomes, and the survey refusal rate (19%) was 8 percentage points higher than the proportion of respondents who refused to give their income details to the Australian Bureau of Statistics (11%). The skew is very slight and is unlikely to lead to sample bias. Because of these refusals, however, it is impossible to tell what the actual distribution of household income is. In comparison to 2001 and 2004, a lower refusal rate was obtained in the survey, so the income of a higher proportion of respondents is known.

Occupations were skewed towards professionals and managers (upper white collar) and away from skilled and semi-skilled occupations. However, in aggregate, there is a good match between the categories.

Education level groups are captured slightly differently from previous surveys. The major difference is that respondents who completed up to and including Year 12 have been grouped together. Within this group attitudes are similar and contrast with people educated to other education levels. It is difficult to make accurate comparisons with the Australian public as the Australian Bureau of Statistics has not collected directly comparable data until 2006 and this data was not available at the time of writing this report. However, combining data on schooling level with education level overall, it seems that the sample is skewed quite heavily towards respondents with a degree or higher level of education.

4.3 Sample variance

The sample variation at the national level (n=1503) is between 1.1% and 2.5%. This means that there is a 95% chance that if the survey was replicated and all things were equal, the results for any measure would fall within ± 2.5% of the survey estimate.
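The quoted range can be reproduced with the standard margin-of-error formula for a proportion, $z\sqrt{p(1-p)/n}$ with $z = 1.96$ at the 95% confidence level. The sketch below assumes simple random sampling with no design effect, and that the lower and upper figures correspond to survey estimates of 5% (or 95%) and 50% respectively; the report does not state how its figures were derived.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Half-width of the confidence interval for a proportion p with sample size n."""
    return z * math.sqrt(p * (1 - p) / n)


n = 1503
for p in (0.05, 0.50):
    print(f"estimate {p:.0%}: ±{margin_of_error(p, n):.1%}")
# estimate 5%: ±1.1%
# estimate 50%: ±2.5%
```

The margin is widest for estimates near 50% and narrows as the estimate approaches 0% or 100%, which is why a range is quoted rather than a single figure.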

Throughout this survey comments have been made on results that are significant at the 95% confidence level. That is, in 95 out of 100 cases, the result would be within the expected range for the results shown. As a guide, to be statistically significant the percentage differences for the major analytical subgroups are:

- Age ± 6 – 9%

- Sex ± 5%

- State ± 7 – 17%

- Education level ± 6 – 7%

- Income ± 7 – 10%

The base sizes shown throughout the report are the actual number of respondents interviewed. The data on which the results are based are weighted.

5.0 Difficulties encountered, observations and recommendations

Generally speaking, the survey went to plan. There were several exceptions relating to the questionnaire itself, filling the quotas, the mode of interviewing and the timeline allowed, especially for reporting. We elaborate on these difficulties further here and offer some thoughts as to ways in which these could be minimised in future studies.

5.1 The questionnaire

5.1.1 Questionnaire length

The key problem with the questionnaire is its length. While it averaged just over 27 minutes, some interviews lasted for nearly 45 minutes. In some ways this is testament to the interest shown by respondents. As the field statistics show, only 21 people started the interview but failed to complete it and not all of these for reasons of length. Once respondents had agreed to interview having been told exactly how long it would take, they were committed to the task at hand.

The subject matter is clearly not a problem. If it were, one would expect the quality of response to deteriorate as the interview progresses. There is no evidence for this and the level of refusal and “don’t know” responses did not increase throughout the interview. In fact the reverse was true, with 92% of respondents claiming to be aware of closed-circuit television and answering the subsequent questions about CCTV cameras, compared with only 70% in the Verification Study.

Having said this, the response rate was very low and this was directly related to respondents realising how much time they needed to give to participate – more time than most of them had available in the early to mid evening or during the day on Saturday.

As mentioned earlier, the market research industry guidelines on interview length recommend a maximum telephone interview length of 20 minutes where a prize or incentive is not offered and 40 minutes where a prize or incentive is offered. These guidelines are based on much academic research that demonstrates that, on average, respondent goodwill falls dramatically after 20 minutes of questioning on the telephone. It is possible that offering an incentive might increase response rates. We considered this idea but rejected it because it was the wish of the Office that interviews should be totally anonymous, and offering a prize or other incentive requires personal details in the form of a name and contact address to be captured.

It is also difficult to find an appropriate incentive for a project such as this one. Incentives usually consist of such items as games of chance (entry into a prize draw, a scratch and win ticket, etc.) or cash or kind. In both cases, not only does the actual choice of incentive become important, but also the administration adds substantially to the cost.

We believe a better way would be to modularise the questionnaire. This could be achieved in one of two ways.

- A core set of questions could be asked of all respondents and other topics could be allocated according to a rotation plan so that enough respondents answer questions on each topic for the results to be reliable. If adopting this approach we would recommend using questions that vary by subgroup as the core, so that the maximum number of interviews is achieved for these, and rotating modules that Australians have unanimous views about.

- All question modules could be rotated.

The drawback to this plan is that the rotation must be structured to allow enough people to answer each question module to provide robust answers. This may mean increasing the size of the sample. Nonetheless, if a questionnaire can be developed that takes no more than 20 minutes to complete, we believe that the additional costs involved in increasing the sample may well be offset by the improvement in response rate. Further, while the industry is adopting the 20-minute rule as a guideline at present, there are moves to mandate this in the near future. Companies that are members of AMSRO, as most credentialed research companies are, will be bound to comply. Thus paying incentives for telephone interviews that average over 20 minutes is likely to become compulsory before the Office conducts its next survey.
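The first option above (a fixed core plus rotating modules) can be sketched as follows. This is an illustration only, not an allocation method used or proposed by Wallis. The module labels are loosely based on the 2007 questionnaire, but the split between core and rotating modules, the number of rotating modules per interview and the function names are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical split between core and rotating modules (an assumption, not from the report).
CORE = ["General Attitudes", "Level of Knowledge", "Demographics"]
ROTATING = ["Government", "Business", "Health", "Workplace",
            "Internet", "ID Theft", "CCTV"]


def build_rotation_plan(n_respondents, modules_per_interview):
    """Give every respondent the core plus a balanced subset of rotating modules.

    Rotating modules are handed out round-robin, so the number of respondents
    answering any two rotating modules never differs by more than one.
    """
    m = len(ROTATING)
    if not 0 < modules_per_interview <= m:
        raise ValueError("modules_per_interview must be between 1 and the number of rotating modules")
    plan = []
    for i in range(n_respondents):
        start = (i * modules_per_interview) % m
        chosen = [ROTATING[(start + j) % m] for j in range(modules_per_interview)]
        plan.append(CORE + chosen)
    return plan


def module_counts(plan):
    """Count how many respondents are asked each module."""
    return Counter(module for interview in plan for module in interview)
```

Under these assumptions, a sample of 1503 with three of the seven rotating modules per interview would put each rotating module in front of roughly 644 respondents, while the core is still answered by everyone. In practice the allocation would also need to be randomised and balanced within quota cells, which this sketch does not attempt.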

5.1.2 Question structure

Several of the questions in the questionnaire allowed respondents to offer a multiple response when a single response would make more sense. For the sake of comparability with the past, Wallis and the Office asked one question in the same fashion in 2007 as in 2004. Two further questions, whose response codes had been altered, allowed multiple responses although they had previously been asked as single-response questions with a different answer set. The following questions would benefit from allowing a single response only (the questionnaire appears in Appendix 2 and the question numbers below correspond to this questionnaire). We have also suggested some wording changes to make them easier for respondents to answer.

Q7. Which of the following statements BEST describes how you generally feel when organisations that you have NEVER DEALT WITH BEFORE send you unsolicited marketing information? Would you say… (READ OUT)

Allow only a single response. Currently (and in 2001 and 2004) a multiple response is allowed, and some people profess both to being angry and annoyed when they receive the material and to feeling concerned about where the organisation obtained their personal information.

Q22. When do you think your doctor should be able to share your health information with other doctors or health service providers such as (ROTATE: pharmacists, specialists, pathologists or nurses)?

Responses to this question, which was established as a multiple response question, show that different health professionals are regarded differently, prompting some respondents to accept information sharing between their doctor and some of the named professionals but not others. We recommend using a single response and removing the descriptor (i.e. ROTATE: pharmacists, specialists, pathologists or nurses), allowing respondents to talk in general terms about whether in principle they believe their doctor should discuss their details with health professionals that might be relevant to their particular health problem.

Q29a. I’m going to read you three statements. For each could you tell me if you think it’s appropriate behaviour for an employer to do whenever they choose, only if they suspect wrong-doing, for the safety and security of all employees, or not at all?

Responses suggest that respondents interpreted this question in different ways. Some considered the advantages of an employer having surveillance in public places and monitoring people entering and exiting a building, that is, for the safety and security of staff, whereas others considered the privacy implications of surveillance equipment monitoring employee performance. Because of these two themes, several respondents answered the question in more than one way, so the total responses did not add to 100%. It would be preferable to state the exact circumstances more clearly. For example, 29a might become:

(a) “Use surveillance equipment such as video and audio cameras to monitor all activities in the workplace/parts of the workplace where the public has access, etc.”

And (b) “finally, do you think it’s appropriate behaviour for an employer to monitor telephone conversations…(READ OUT) whenever they choose, only if they suspect wrong-doing, for training or quality control purposes or not at all?”

Respondents are used to phone calls with frontline staff being monitored for quality control and training purposes, and support this. Depending on what this question is meant to capture, it might be better to be more specific about the nature of the call being made, for example by excluding frontline service staff and focusing on general calls. E.g. “When do you think it’s appropriate behaviour for an employer to monitor telephone conversations other than those being made to a customer services or sales officer?”

5.2 Filling quotas

In an effort to capture the views of younger Australians, particularly those aged 18 – 24, a disproportionate number of interviews was completed with them. The survey results show that the 18 – 24 year age group knows the least about privacy legislation, and it is reasonable to assume that they would therefore have a lower level of interest in the subject matter than other age groups. This, added to the length of the questionnaire, the difficulty in locating young people in the permissible interviewing hours and the increased use of mobile communications by this age group since the last survey, made this quota far more difficult to fill than anticipated. This had a flow-on effect on the overall response rate, as willing respondents in older age groups were not interviewed because enough interviews had already been completed with Australians in these age groups.
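The effect described above, where willing respondents are turned away once their quota cell is full, follows from the way quota control works. The sketch below is illustrative only: the quota cells, the target numbers and the class name are invented for the example and are not the quotas used in this survey.

```python
from dataclasses import dataclass, field


@dataclass
class QuotaTracker:
    """Tracks completed interviews against per-cell quota targets (hypothetical)."""
    targets: dict                                  # cell label -> target number of interviews
    completed: dict = field(default_factory=dict)  # cell label -> interviews completed so far

    def is_open(self, cell):
        """A cell stays open until its target number of interviews is reached."""
        return self.completed.get(cell, 0) < self.targets[cell]

    def record_interview(self, cell):
        """Count an interview if the cell is open; return False if the respondent is screened out."""
        if not self.is_open(cell):
            return False
        self.completed[cell] = self.completed.get(cell, 0) + 1
        return True
```

Every contact screened out by a full cell still counts against the response rate, which is why a hard-to-fill cell (here, the youngest age group) depresses the overall figure.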

We do not advocate changing the quota structure. However, the difficulty might be alleviated slightly by changing the introduction to the questionnaire and asking to speak with the youngest male aged over 18 in preference to others, then the youngest female.

The Office also requested that no interviewing should be conducted on Sunday in order to avoid any suggestion that the interview process was an imposition on people’s privacy and in line with the Australian Communications and Media Authority’s (ACMA) industry standard at that time. We have found that Sunday is a good day to locate younger respondents. Indeed the company provided field statistics to ACMA privately and via the AMSRO submission in support of market researchers being allowed to make unsolicited research calls on Sundays. In its final legislation, ACMA accepted the industry position and market researchers are now able to make unsolicited research calls on Sunday. While we applaud the strict calling regime enforced by the Office, we suggest that falling in line with industry standards, that is, allowing unsolicited calls to be made on Sunday, would also help in filling this quota.

5.3 Conduct of the survey – methodology

The survey was conducted by telephone using a sample drawn at random from the electronic White Pages. In our proposal for this study we discussed other options and came to the conclusion that this method still offers the best means of gaining a representative sample of community attitudes. However, we also pointed out that:

- The proportion of households with a connected landline is falling, with households opting in greater numbers to use mobile technology and/or Voice over Internet Protocol (VoIP), including Skype.

- Younger people in particular are the least likely to have a landline telephone, and a growing percentage of those households with landlines use them only to access the Internet.

- The proportion of households with Internet access and, within this, broadband connections is increasing.

Another confounding factor for this survey was the recent introduction of the telemarketing Do Not Call Register administered by ACMA. It is too early to say to what extent its introduction contributed to a disappointing response rate.

Taking these factors into account, in our opinion it would be worth considering conducting all or some of the survey online in future. The biggest challenge in moving the survey online, whether in part (for example, only for younger Australians) or completely, will be access to a representative listing of the Australian population. In our opinion such lists do not currently exist. However, the situation in this field is changing rapidly and it is likely that the lists will be much improved in three years’ time.

5.4 Practical considerations – the timeline

The Office was working towards publishing the report from this study during Privacy Awareness Week (26 August – 1 September 2007). This was achieved. However, fieldwork took longer than anticipated owing to the difficulty in filling younger age quotas and this reduced the amount of time available for reporting.

If the project were conducted in the same manner as this year, the ideal timetable from the appointment of the consultant would be:

- Questionnaire development 2 weeks

- Pilot test 1 week

- Interviewing 3 weeks

- Data preparation and preliminary analysis 1 week

- Reporting to draft stage 3 weeks

- Redrafts 2 weeks

5.5 Analysis of the survey data

In analysing the survey data it became apparent that many sections of the Australian community hold similar views on a range of privacy-related issues. Demographic variables alone do not always explain differences in opinion, and it might be possible to segment the community on the basis of attitudes towards privacy. The statistical techniques usually used for market segmentation (typically factor analysis and cluster analysis) require answers to be given on a scale, for example extent of agreement (strongly agree, partly agree, neither agree nor disagree, partly disagree, strongly disagree), rather than as a binary choice (eg yes or no).

Many of the survey questions are asked in a manner that supports these analyses; however, some related questions are not. An analysis could be done on the existing data set, but if segmentation analysis is considered useful, we recommend reviewing and revising the questions with this purpose in mind when the survey is conducted again.
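To illustrate what "scaled" answers mean in practice, the sketch below converts five-point agreement answers into the numeric codes used in the questionnaire (1 for strongly agree through 5 for strongly disagree) and assembles a respondent-by-question table of the kind that factor or cluster analysis would take as input. It is a minimal sketch: the function names and the decision to drop respondents with any "can't say" answer are assumptions made for the example, and no segmentation is actually performed.

```python
# Numeric codes follow the agreement scale in the questionnaire (e.g. Q19).
AGREEMENT_CODES = {
    "strongly agree": 1,
    "partly agree": 2,
    "neither": 3,
    "partly disagree": 4,
    "strongly disagree": 5,
}


def encode_response(answer):
    """Return the numeric code for an answer, or None for "can't say" or anything unrecognised."""
    return AGREEMENT_CODES.get(answer.strip().lower())


def build_matrix(responses):
    """Turn a list of {question: answer} dicts into numeric rows, keeping complete cases only."""
    items = sorted({item for r in responses for item in r})
    matrix = []
    for r in responses:
        row = [encode_response(r.get(item, "")) for item in items]
        if None not in row:  # assumption: drop respondents with any missing answer
            matrix.append(row)
    return items, matrix
```

A yes/no question would yield only two distinct values per column, which gives these techniques very little variation to work with. That is the practical reason for preferring scaled questions.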

Appendix 1: Verification Study

A Verification Study was conducted to ensure that responses to questions in the main survey were accurate and representative of the broader community. Concerns had been raised in the past that contextual bias could enter the questionnaire as respondents were primed by previous questions to provide answers that may not have reflected their view when asked questions in isolation.

The Verification Study consisted of three questions from the main survey. It was conducted as part of NewsPoll’s Omnibus, a multi-client survey, between 3 and 7 August 2007. The sampling structure of the Omnibus was similar to that used for the main survey and 1,200 Australians over 18 years of age were interviewed by telephone.

On the whole, responses were in line with the results of the main study, except for the question on awareness of CCTV. This question was included because the following question, on concerns about the use of CCTV, had been asked in the main survey only of those who were aware of CCTV. There was a discrepancy of 22 percentage points, with respondents to the Verification Study (70%) being much less likely to be aware of CCTV than those in the main survey (92%). One explanation for this is that respondents to the main survey answered the CCTV section last and were, by that point, quite attuned to privacy issues. In particular the ‘privacy in the workplace’ section had already asked about surveillance equipment. Also, the introduction to the CCTV section was more detailed than the brief introduction in the Verification Study. The introductions were as follows:

Main survey

The last topic I’d like your opinions on is Closed Circuit Television (CCTV). I’m talking about cameras that are used to monitor PUBLIC SPACE for example inner city streets, parks and car parks. Are you aware of or have you seen CCTV cameras?

Verification survey

Thinking now about Closed Circuit Television, also known as CCTV. Are you aware of or have you seen CCTV cameras?

With this exception, responses fell within the expected range of sampling error, including those relating to concern about the use of CCTV cameras.
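One common way to judge whether two survey percentages differ by more than sampling error is to compare the difference with a 95% margin for the difference between two independent proportions. The sketch below applies that check to figures reported in this appendix (1503 main-survey interviews, 1,200 Omnibus interviews). It is an illustration only: the report does not state which test was used, the function names are hypothetical, and the calculation assumes simple random samples, ignoring the quotas and weighting that would widen the true margins.

```python
import math


def difference_margin(p1, n1, p2, n2, z=1.96):
    """Approximate 95% margin of error for the difference between two independent proportions."""
    standard_error = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return z * standard_error


def within_sampling_error(p1, n1, p2, n2, z=1.96):
    """True if the observed difference is no larger than the margin of error."""
    return abs(p1 - p2) <= difference_margin(p1, n1, p2, n2, z)


if __name__ == "__main__":
    # "Very concerned" about information sent overseas: 63% (main) vs 66% (Verification Study).
    print(within_sampling_error(0.63, 1503, 0.66, 1200))
    # Aware of CCTV: 92% (main) vs 70% (Verification Study).
    print(within_sampling_error(0.92, 1503, 0.70, 1200))
```

Under these simplifying assumptions the margin for the first comparison is about 3.6 percentage points, so a 3-point difference falls inside it, while the 22-point gap in CCTV awareness falls well outside.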

Concern about personal information being sent overseas

Q. How concerned are you about Australian businesses sending their customers’ personal information overseas to be processed?

| Response | Privacy Survey 2007 % | Verification Study (NewsPoll Omnibus) % | Difference % |
|---|---|---|---|
| Very Concerned | 63 | 66 | 3 |
| Somewhat Concerned | 27 | 23 | -4 |
| Not concerned | 9 | 10 | 1 |
| Don’t know | 1 | 1 | 0 |

Have been or know someone who has been the victim of identity theft or fraud

Q. Now I’d like to ask you about identity fraud. By identity fraud and theft I mean where an individual obtains your personal information such as credit card, driver’s licence, passport or other personal identification documents and uses these to obtain a benefit or service for themselves fraudulently. Have you, or someone you personally know, ever been the victim of identity fraud or theft?

| Response | Privacy Survey 2007 % | Verification Study (NewsPoll Omnibus) % | Difference % |
|---|---|---|---|
| Yes, you | 9 | 8 | -1 |
| Yes, someone you know | 17 | 14 | -3 |
| No | 75 | 78 | 3 |
| Don’t know | <1 | <1 | 0 |

Aware of CCTV cameras

Q. Thinking now about Closed Circuit Television, also known as CCTV. Are you aware of or have you seen CCTV cameras?

| Response | Privacy Survey 2007 % | Verification Study (NewsPoll Omnibus) % | Difference % |
|---|---|---|---|
| Yes | 92 | 70 | -22 |
| No | 7 | 29 | 22 |
| Don’t know | <1 | 2 | 0 |

Concern about the use of CCTV cameras

Q. How concerned are you about the use of CCTV cameras in public spaces? Are you…?

| Response | Privacy Survey 2007 % | Verification Study (NewsPoll Omnibus) % | Difference % |
|---|---|---|---|
| Very Concerned | 3 | 5 | 2 |
| Somewhat Concerned | 11 | 12 | 1 |
| Not concerned | 85 | 83 | -2 |
| Don’t know | <1 | 1 | <1 |

Appendix 2: Questionnaire

Wallis Consulting Group – Office of the Privacy Commissioner

2007 COMMUNITY ATTITUDES RESEARCH

FINAL QUESTIONNAIRE – 5th July

Good [Morning/ Afternoon/ Evening], my name is (SAY NAME) from Wallis Consulting Group. Today we are conducting an important survey on behalf of the Office of the Privacy Commissioner on the protection and use of people’s personal information by businesses and other organisations. All views are of interest to us and results may be used to help better protect consumers’ privacy in the future. Your answers will be strictly confidential and used as statistics only. The interview will take between 20 and 30 minutes on average depending on your answers and this is your chance to have your say on matters relating to privacy.

To ensure we speak to a representative sample of the population, we would like to speak with someone in the household aged 18 years or over.

IF NOT A CONVENIENT TIME NOW MAKE APPOINTMENT

IF ASKS HOW DID YOU GET MY NUMBER, SAY: Your number was selected randomly from the white pages phone book.

IF RESPONDENT WANTS FURTHER INFORMATION, SAY: You can find out more about this survey from our website (www.wallisgroup.com.au) or you may contact the Office of the Privacy Commissioner on 1300 363 992, during business hours.

This call may be monitored for quality control purposes. Is that OK with you?

- Yes 1

- No 2 Mark accordingly

We’d prefer that you answer all the questions, but if there are any that you don’t want to answer, that’s fine, just let me know.

S1. Sex. Record sex of respondent

- Male 1

- Female 2

S2. Before we begin, to ensure we are interviewing a true cross-section of people, would you mind telling me which of the following age groups you belong to? (READ OUT)

- 18-24 1

- 25-29 2

- 30-34 3

- 35-44 4

- 45-49 5

- 50-54 6

- 55-64 7

- 65+ 8

- (DON’T READ) REFUSED 9 Terminate

Check quotas

Main questionnaire

General attitudes to providing personal information

Q1. Firstly, have you ever decided NOT TO DEAL with a PRIVATE COMPANY or CHARITY because of concerns over the protection or use of your personal information?

- Yes 1

- No 2

- Can’t say 3

Q2. Have you ever decided NOT TO DEAL with a GOVERNMENT DEPARTMENT because of concerns over the protection or use of your personal information?

- Yes 1

- No 2

- Can’t say 3

Q3. When completing forms or applications that ask for personal details, such as your name, contact details, income, marital status etc, how often, if ever, would you say you leave some questions blank as a means of protecting your personal information? Would that be …(READ OUT)?

- Always 1

- Often 2

- Sometimes 3

- Rarely 4

- Never 5

- Can’t say 6

Q4. When providing your personal information to any organisation, IN GENERAL, what types of information do you feel RELUCTANT to provide? [IF NECESSARY For example, (ROTATE) your name, address, phone number, financial details, income, marital status, date of birth, email address, medical information, genetic information, or something else] What else?(MULTI)

If more than one

Q5. And of [LIST ANSWERS IN Q4] which ONE of these do you feel MOST RELUCTANT to provide? (SINGLE)

- Name 1

- Home Address 2

- Home phone number 3

- Financial details such as bank account 4

- Details about your income 5

- Marital status 6

- Date of Birth 7

- E-mail address 8

- Medical history/health information 9

- Genetic information 10

- Religion 11

- How many people or males in household/family member details 12

- Other (Specify) 97

- CAN’T SAY/ IT DEPENDS 98

- None of these 99

If more than one response on Q4, ask:

If mentioned type of information, or depends on type of information (codes 1 to 98 on Q5), ask:

Q6. And what is your MAIN reason for not wanting to provide your [ANSWER FROM Q5]?

- May lead to financial loss/people might access bank account 1

- It’s none of their business/Invasion of privacy 2

- Discrimination 3

- I do not want to be identified 4

- I do not want people knowing where I live or how to contact me 5

- The information may be misused 6

- Information might be passed on without my knowledge 7

- Don’t want junk mail/unsolicited mail SPAM 8

- I don’t want to be bothered/hassled/hounded by phone or door to door 9

- For safety/security/protection from crime 10

- Unnecessary/irrelevant to their business or cause 11

- Other (SPECIFY) 97

- Can’t say 98

Ask everyone

Q7. Which of the following statements BEST DESCRIBES how you GENERALLY feel when organisations that you have NEVER DEALT WITH BEFORE send you unsolicited marketing information? Would you say...(READ OUT) (MULTI)?

- I feel angry and annoyed 1

- I feel concerned about where they obtained my personal information 2

- It doesn’t bother me either way, I don’t care 3

- It’s a bit annoying but it’s harmless 4

- I enjoy reading the material and don’t mind getting it at all 5

- Fixed openend or something else (SPECIFY) 97

- Fixed Single (DON’T READ) CAN’T SAY 98

Trust in organisations handling personal information

The next few questions concern the type of public information that should or should not be available to businesses for marketing purposes.

Q8. How trustworthy or untrustworthy would you say the following organisations are with regards to how they protect or use your personal information? IF TRUSTWORTHY: Is that highly trustworthy or somewhat trustworthy? IF UNTRUSTWORTHY: Is that highly untrustworthy or somewhat untrustworthy?

| ROTATE | Highly Trustworthy | Somewhat Trustworthy | Neither (DNR) | Somewhat untrustworthy | Highly untrustworthy | Can’t say |
|---|---|---|---|---|---|---|
| a) Financial institutions | 1 | 2 | 3 | 4 | 5 | 6 |
| b) Real Estate Agents | 1 | 2 | 3 | 4 | 5 | 6 |
| c) Insurance Companies | 1 | 2 | 3 | 4 | 5 | 6 |
| d) Charities | 1 | 2 | 3 | 4 | 5 | 6 |
| e) Government Departments | 1 | 2 | 3 | 4 | 5 | 6 |
| f) Health service providers including doctors, hospitals and pharmacists | 1 | 2 | 3 | 4 | 5 | 6 |
| g) Market research organisations | 1 | 2 | 3 | 4 | 5 | 6 |
| h) Retailers | 1 | 2 | 3 | 4 | 5 | 6 |
| i) Businesses selling over the internet | 1 | 2 | 3 | 4 | 5 | 6 |

ROTATE 9 and 9b

Q9. GENERALLY, how likely or unlikely would you be to provide your personal information to an organisation if it meant you would receive discounted purchases? Is that very or quite…

AND

Q9b. and how about if it meant you would have a chance to win a prize? Is that very or quite…

- Very likely 1

- Quite likely 2

- Neither likely or unlikely (DO NOT READ) 3

- Quite unlikely 4

- Very unlikely 5

- Can’t say (DO NOT READ) 6

- Depends (DO NOT READ) 7

Level of knowledge

The next few questions are about the Federal Privacy Act and what you believe is covered by it.

Q10. Firstly, I’m going to list six types of organisations. Which of these, if any, do you think GENERALLY must operate under the Federal Privacy Act? (MULTI)

- State Government departments 1

- Commonwealth Government departments 2

- Small businesses 3

- Large businesses 4

- Charities 5

- None of them 6

- Businesses based overseas 7

Q11. Which of the following activities, if any, would be against the Federal Privacy Act? (RANDOM)

- Your neighbours spying on you 1

- An individual steals your ID and uses it to pretend that they are you 2

- A small business reveals a customer’s information to other customers 3

- A large business reveals a customer’s information to other customers 4

- A bank or other organisation sends customer data to an overseas processing centre 5

Q12. Were you aware of the Federal PRIVACY LAWS before this interview?

- Yes 1

- No 2

- Can’t say 3

Q13. If you wanted to report the misuse of your personal information, who would you be most likely to contact? (DO NOT READ OUT) Anyone else? (MULTI)

- Police 1

- Ombudsman 2

- The organisation that was involved 3

- The Privacy Commissioner (Federal or State) 4

- Consumer Affairs (in your state) 5

- Local State MP 6

- State government department 7

- Local Council 8

- Lawyers/solicitors 9

- Department of Fair Trading 10

- The media eg TV/ radio/ newspapers 11

- Seek advice from a friend or relative 12

- Other (SPECIFY) 97

- CAN’T SAY (if none) 98

Ask if Q13 code 12

Q13a. Is that friend or relative a professional in a relevant field?

What is it?

- Police 1

- Ombudsman 2

- The organisation that was involved 3

- The Privacy Commissioner (Federal or State) 4

- Consumer Affairs (in your state) 5

- Local State MP 6

- State government department 7

- Local Council 8

- Lawyers/solicitors 9

- Department of Fair Trading 10

- The media eg TV/ radio/ newspapers 11

- No 12

- Other (SPECIFY) 97

- CAN’T SAY (if none) 98

Q14. Are you aware that a Federal Privacy Commissioner exists to uphold privacy laws and to investigate complaints people may have about the misuse of their personal information?

- Yes 1

- No 2

- Can’t say 3

Government

The next questions cover Government Departments and privacy

Q15. If it was suggested that you be given a unique number to be used for identification by ALL Commonwealth Government departments and to use ALL government services, would you be in favour of this? Is that strongly or partly?

- Strongly in favour 1

- Partly in favour 2

- Neither in favour or against it (DO NOT READ) 3

- Partly against 4

- Strongly against 5

- Can’t say (DO NOT READ) 6

Q16. Do you believe government departments should be able to cross-reference or share information in their databases about you and other Australians for:

- Any Purpose 1

- Some Purposes 2

- Not At All 3

- Can’t Say 4

If some purposes (code 2 in Q16), ask, otherwise go to Q17:

Q16a. For which of the following purposes do you believe governments should be allowed to cross reference your personal information? Should they be allowed to cross-reference information for… (READ OUT)

| ROTATE | Yes | No | Don’t know |
|---|---|---|---|
| Updating information like contact details | 1 | 2 | 3 |
| To prevent or solve fraud or other crime | 1 | 2 | 3 |
| To reduce costs or improve efficiency | 1 | 2 | 3 |

Ask everyone

Q17. Which of the following instances would you regard to be a misuse of your personal information?

| ROTATE | Yes (invasion of privacy) | No | Don’t know |
|---|---|---|---|
| a) a government department that you haven’t dealt with gets hold of your personal information | 1 | 2 | 3 |
| b) a Government department monitors your activities on the Internet, recording information on the sites you visit without your knowledge | 1 | 2 | 3 |
| c) You supply your information to a Government department for a specific purpose and the agency uses it for another purpose. | 1 | 2 | 3 |
| d) A Government department asks you for personal information that doesn’t seem relevant to the purpose of the transaction. | 1 | 2 | 3 |

Privacy and businesses

Q19. I would like you now to think about your privacy and businesses. I’m going to read you a number of statements and I’d like you to tell me whether you agree or disagree with each. Do you agree or disagree… Is that strongly or partly

| ROTATE | Strongly agree | Partly agree | Neither (DNR) | Partly disagree | Strongly disagree | Can’t say (DNR) |
|---|---|---|---|---|---|---|
| a) businesses should be able to use the electoral roll for marketing purposes | 1 | 2 | 3 | 4 | 5 | 6 |
| b) businesses should be able to collect your information from the White Pages telephone directory without your knowledge for the purposes of marketing | 1 | 2 | 3 | 4 | 5 | 6 |

Q18. Which of the following instances would you regard to be a misuse of your personal information?

| ROTATE | Yes (invasion of privacy) | No | Don’t know |
|---|---|---|---|
| a) a business that you don’t know gets hold of your personal information | 1 | 2 | 3 |
| b) a business monitors your activities on the internet, recording information on the sites you visit without your knowledge. | 1 | 2 | 3 |
| c) You supply your information to a business for a specific purpose and the business uses it for another purpose. | 1 | 2 | 3 |
| d) A business asks you for personal information that doesn’t seem relevant to the purpose of the transaction. | 1 | 2 | 3 |

Q21. How concerned are you about Australian businesses sending their customers’ personal information overseas to be processed? (READ OUT)

- Very concerned 1

- Somewhat concerned 2

- Not concerned 3

- Can’t say 4

Health information

The next few questions concern medical or health information and privacy.

Q22. When do you think your doctor should be able to share your health information with other doctors or health service providers, such as (ROTATE: pharmacists, specialists, pathologists or nurses)? (READ OUT)

- For anything to do with my health care 1

- Only for purposes that are related to the specific condition being treated 2

- Only for serious or life threatening conditions 3

- For no purpose, they should always ask for my consent 4

- Don’t know/Can’t say (DO NOT READ) 5

Q23. Do you agree or disagree that…?

Your doctor should be able to discuss your personal medical details with other health professionals - in a way that identifies you - WITHOUT YOUR CONSENT if they believe this would assist your treatment? Is that strongly or partly…

- Strongly agree 1

- Partly agree 2

- Neither agree or disagree (DO NOT READ) 3

- Partly disagree 4

- Strongly disagree 5

- Can’t say (DO NOT READ) 6

Q24. The idea of building a National Health Information Network has been put forward. If this existed it would be an Australia-wide database which would allow medical professionals anywhere in Australia to access a patient’s medical information if it was needed to treat a patient. The information could also be used on a de-identified basis to compile statistics on the types of treatments being used, types of illnesses suffered and so on…

If such a database existed, do you think inclusion of your medical information should be VOLUNTARY, or should ALL MEDICAL RECORDS be entered without permission or consent?

- Inclusion should be voluntary 1

- All medical records should be entered 2

- Other (SPECIFY) 97

- CAN’T SAY 98

Q25. Health information is often sought for research purposes and is generally de-identified - that is, NOT linked with information that identifies an individual. Do you believe that an individual’s permission should be sought before their de-identified health information is released for research purposes, or not?

- Yes 1

- No 2

- Maybe 3

- Can’t say 4

Q26. If a person has a serious genetic illness, under what circumstances do you think it is appropriate for their doctor to tell a relative so the relative could be tested for the same illness: Should doctors tell their relatives… (SINGLE) (READ OUT)

- Without the patient’s consent, even if it’s unlikely that the relative may have the condition? 1

- Without the patient’s consent, but if there is strong possibility of the relative also having the condition? 2

- If the patient consents to their relative being told 3

- Don’t know/ can’t say (DO NOT READ) 4

Employee privacy

Now for a few questions about employees’ privacy in the workplace

Q27. Do you think that employees should have access to the information their employer holds about them?

- Yes 1

- No 2

- Can’t say 3

Q28. I’m going to read you three statements. For each could you tell me if you think it’s appropriate behaviour for an employer to do whenever they choose, only if they suspect wrong-doing or not at all.

| ROTATE | Whenever they choose | Only if suspect wrongdoing | Not at all | Can’t say (DNR) |
|---|---|---|---|---|
| a) Read e-mails on a work e-mail account | 1 | 2 | 3 | 4 |
| b) Randomly drug and alcohol test employees | 1 | 2 | 3 | 4 |
| c) Monitor an employee’s work vehicle location (eg using GPS) | 1 | 2 | 3 | 4 |

Q29a. I’m going to read you another three statements. This time could you tell me if you think it’s appropriate behaviour for an employer to do whenever they choose, only if they suspect wrong-doing, only for the safety or security of employees or not at all. (SINGLE)

| ROTATE | Whenever they choose | Only if suspect wrongdoing | Safety/ Security | Not at all | Can’t say (DNR) |
|---|---|---|---|---|---|
| a) Use surveillance equipment such as video and audio cameras to monitor the workplace | 1 | 2 | 3 | 4 | 5 |
| b) Monitor everything an employee types into their computer, including what web sites they visit and what they type in e-mails | 1 | 2 | 3 | 4 | 5 |

Q29b. And finally, do you think it’s appropriate behaviour for an employer to monitor telephone conversations…? (READ OUT)

- Whenever they choose 1

- Only if they suspect wrongdoing 2

- For training and quality control; or 3

- Not at all 4

- Can’t say (DO NOT READ) 5

Q30. How important is it to you that an employer has a privacy policy that covers when they will read employee emails, randomly drug test employees, use surveillance equipment to monitor employees and monitor telephone conversations. Is it …(READ OUT)?

- Not at all important 1

- Not very important 2

- Quite important 3

- Very important 4

- Can’t say (DO NOT READ) 5

Internet

Now I’d like to ask you a few questions about using the internet and giving personal information over it.

Q31. Are you more or less concerned about providing your personal details electronically or online compared to in a hard copy/paper based format? …

- More concerned 1

- Less concerned 2

- As concerned 3

- Can’t say (DO NOT READ) 4

Q32. And are you more or less concerned about providing your personal details electronically or online as opposed to over the telephone?

- More concerned 1

- Less concerned 2

- As concerned 3

- Can’t say (DO NOT READ) 4

Q33. When completing online forms or applications that ask for personal details, have you ever PROVIDED FALSE INFORMATION as a means of protecting your privacy?

- Yes 1

- No 2

- Can’t say 3

Q34. Are you MORE OR LESS concerned about the privacy of your personal information while using the internet than you were two years ago?

- More concerned 1

- Less concerned 2

- As concerned 3

- Can’t say (DO NOT READ) 4

Q35. Do you normally read the privacy policy attached to any internet site?

- Yes 1

- No 2

- Can’t say 3

If seen or read privacy policy (code 1 in Q35), ask, otherwise go to Q37

Q36. What impact, if any, did seeing or reading these privacy policies have upon your attitude towards the site? (DO NOT READ) (MULTI)

- It’s a good idea/I approve of the privacy policy/they are doing the right thing/prefer to see on sites/respect sites for having it 1

- Feel more confident/comfortable/secure about using site 2

- Appear more honest/trustworthy/responsible/legitimate 3

- Helps me decide whether to use the site or not 4

- Still apprehensive about sites that have them/Don’t trust them/not convinced 5

- Made me more cautious/aware when using the internet generally 6

- Too long/complicated to read 7

- Other (Specify) 97

- Can’t say 98

- None/no 99

ID theft

I’m now going to ask you a few questions about providing photo identification and identity fraud and theft. By identity fraud and theft I mean where an individual obtains your personal information (eg. credit card, drivers licence, passport or other personal identification documents) and uses these to fraudulently obtain a benefit or service for themselves.

Q37. Do you think it is acceptable that you need to show identification documents (such as a drivers licence or passport) in the following situations: (MULTI - RECORD IF ANSWER YES - acceptable)

- On entry to licensed premises (eg Pub/Club/Hotel) 1

- To obtain a credit card 2

- To purchase general goods (eg clothing and food) 3

- To purchase goods for which you need to be over 18 (eg cigarettes) 4

- To get access to services 5

Q38. Do you think it is acceptable that a copy of your identification documents (such as a drivers licence or passport) is made in the following situations:

- On entry to licensed premises (eg Pub/Club/Hotel) 1

- To obtain a credit card 2

- To purchase general goods (eg clothing and food) 3

- To purchase goods for which you need to be over 18 (eg cigarettes) 4

- To get access to services 5

Q39. Have you (or someone you personally know) ever been the victim of identity fraud or theft?

- Yes – it happened to me 1

- Yes – it happened to someone I personally know 2

- No 3

- Can’t say 4

Q40. How concerned are you that you may become a victim of identity fraud or theft in the next 12 months? (READ OUT)

- Very concerned 1

- Somewhat concerned 2

- Not concerned 3

- Can’t say (DO NOT READ) 4

Q41. Do you consider ID fraud or theft to be an invasion of privacy?

- Yes 1

- No 2

- Can’t say 3

Q42. What activities do you think most easily allow identity fraud or theft to occur?

OPEN

CCTV

The last topic I’d like your opinions on is Closed Circuit Television (CCTV). I’m talking about cameras that are used to monitor PUBLIC SPACE for example inner city streets, parks and car parks.

Q43. Are you aware of or have you seen CCTV cameras?

- Yes 1

- No 2 Go to Demos

- Can’t say 3 Go to Demos

Q44. How concerned are you about the use of CCTV cameras in public spaces, are you (READ OUT)…?

- Very concerned 1

- Somewhat concerned 2

- Not concerned 3

- Can’t say 4

Ask if concerned

Q45. What is your main concern? (DO NOT READ)

- Invasion of privacy 1

- Information may be misused 2

- It makes me uncomfortable 3

- Other (specify) 4

- Can’t say 5

Q46. Which organisation or organisations, if any, do you think should have access to what has been recorded on CCTV cameras? (MULTI) (DO NOT READ)

- Everyone 1

- Police 2

- Anti-terrorism law enforcement agencies 3

- Local Councils 4

- Government 5

- Security companies 6

- Businesses 7

- The courts 8

- The organisation that installed them 9

- Other (specify) 10

- Can’t say 11

Q47. Where is it appropriate to have CCTV cameras? OPEN (PROBE)
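The routing notes in the CCTV module (Q43 codes 2 and 3 "Go to Demos", and "Ask if concerned" before Q45) can be sketched as a small function. This is only an illustrative sketch, not the CATI script actually used: the function name `cctv_route` is hypothetical, and it assumes that "concerned" means code 1 or 2 at Q44 and that Q46 and Q47 are asked of everyone who answered "Yes" at Q43.

```python
from typing import List, Optional


def cctv_route(q43: int, q44: Optional[int] = None) -> List[str]:
    """Return the CCTV questions a respondent would be asked.

    q43 and q44 are the response codes from the questionnaire.
    Assumption: "Ask if concerned" means Q44 code 1 (Very concerned)
    or code 2 (Somewhat concerned).
    """
    asked = ["Q43"]
    if q43 != 1:
        # Codes 2 (No) and 3 (Can't say) skip straight to Demographics.
        return asked
    asked.append("Q44")
    if q44 in (1, 2):
        # Only concerned respondents are asked for their main concern.
        asked.append("Q45")
    # Assumption: the remaining CCTV questions go to all aware respondents.
    asked += ["Q46", "Q47"]
    return asked
```

For example, `cctv_route(1, 3)` describes a respondent who has seen CCTV cameras but is not concerned, so Q45 is skipped.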

Demographics

Finally, a few questions about yourself, just to ensure we have spoken to a representative cross section of people.

D1. What is the highest level of education you have reached?

- Primary school 1

- Intermediate (year 10) 2

- VCE/HSC (year 12) 3

- Undergraduate diploma/TAFE/Trade certs 4

- Bachelor’s Degree 5

- Postgraduate qualification 6

- Can’t say 7

D2. Are you now in paid employment?

IF YES, ASK: Is that FULL-time for 35 hours or more a week, or part-time?

IF NO, ASK: Are you retired or a student?

- Yes, full-time 1

- Yes, part-time 2

- No, retired 3

- No, student 4

- Other non-worker 5

- Refused 6

Ask if working full/part time

D3. Are you employed by someone else or are you an employer?

- Employee 1

- Employer 2

- Self-employed/SOHO 3

- Both 4

- Can’t say 5

D4. What is your (last) occupation?

(OPEN – code to ANZSCO standard)

D5. Which best describes your household income before tax?

- Less than $25,000 1

- $25,000-$75,000 2

- $75,000-$100,000 3

- Over $100,000 4

- Refused (do not read) 5

Closing statements — All

Thank you very much for your time. Your views count and on behalf of the Office of the Privacy Commissioner and Wallis Consulting Group, I’m very glad you made them known. In case you missed it, my name is ............... from Wallis Consulting Group. The information you have provided cannot be linked to you personally in any way.

If you have any queries about this study you can call the Australian Market and Social Research Society’s free survey line on 1300 364 830.

Footnotes

[1] The 2001 and 2004 studies were conducted for the Office of the Privacy Commissioner by Roy Morgan Research.

[2] Resolved contact attempts are those for which an outcome was achieved, either an interview, refusal or confirmation that the phone number was not in scope/respondents were ineligible. Unresolved contact attempts are those that did not result in contact with a respondent or confirmation that the phone number was not in scope.

Note that contact attempts refer to the outcome for each individual phone number called, whereas total calls made refers to all call attempts; that is, a single phone number may have been called more than once.
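The distinction in footnote [2] between contact attempts (one outcome per phone number) and total calls made (every dial) can be illustrated with a short sketch. The outcome labels and the function name `summarise_calls` are hypothetical and are not taken from the study's call records; the sketch also assumes that a number's outcome is fixed once it has been resolved.

```python
# Hypothetical outcome labels treated as "resolved" under footnote [2]:
# an interview, a refusal, or confirmation of out-of-scope/ineligibility.
RESOLVED = {"interview", "refusal", "out_of_scope", "ineligible"}


def summarise_calls(calls):
    """Summarise a chronological list of (phone_number, outcome) pairs."""
    final = {}
    for number, outcome in calls:
        # Assumption: once a number is resolved, later calls do not change it.
        if final.get(number) not in RESOLVED:
            final[number] = outcome
    resolved = sum(1 for outcome in final.values() if outcome in RESOLVED)
    return {
        "total_calls": len(calls),       # every call attempt counts
        "contact_attempts": len(final),  # one outcome per phone number
        "resolved": resolved,
        "unresolved": len(final) - resolved,
    }
```

With this counting, a number that is called twice before yielding an interview adds two to total calls made but only one resolved contact attempt.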